Windows Agent Arena: a benchmark for AI agents acting on your computer

We built a scalable, open-source framework to test and develop AI agents that can reason, plan, and act on a PC using language models.

AI assistants like Copilot and ChatGPT have become useful tools for millions of users at work and at home, using large language models (LLMs) to help us with tasks ranging from debugging code to brainstorming dinner recipes. As LLMs become more capable, what should we expect from our AI assistants? At Microsoft we are researching what it would take to develop the next wave of models that can not only reason, but also plan and act to help us. We are excited about the potential of AI agents to improve our productivity and software accessibility by being able to, for example, book vacations, edit documents, or file an expense report.

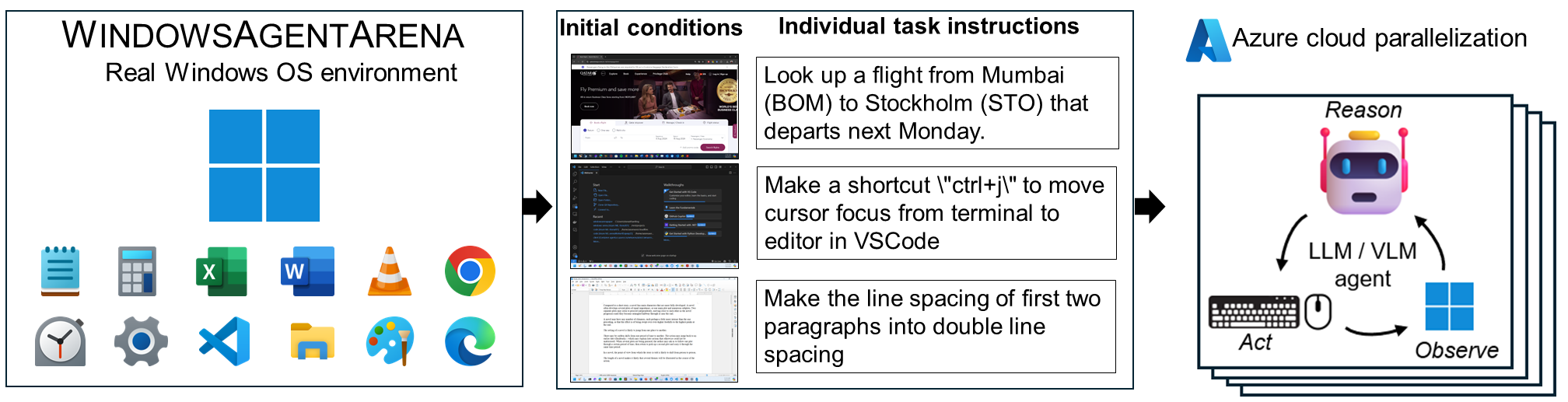

We are proud to introduce Windows Agent Arena, an open-source benchmark that allows researchers in academia and industry to develop, test, and compare AI agents for Windows. It evaluates models across a diverse range of tasks in a real OS, using the same applications, tools, and web browsers available to us.

What is a computer agent?

In the broadest sense, an agent is anything that senses its environment, reasons, and acts on it. When it comes to computer agents, this means understanding the current screen and then clicking, typing, and opening apps which might help a user accomplish their objective. Computer agents are multi-modal, making sense of images and text with large language and vision models.
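The sense, reason, act cycle described above can be sketched as a small loop. This is only an illustration, not the Windows Agent Arena API: `Action`, `run_agent`, `ToyDesktop`, and `scripted_policy` are hypothetical names, the "screen" is a plain string rather than a screenshot, and a scripted function stands in for the language and vision model.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    kind: str            # hypothetical action types: "open_app", "type", "done"
    argument: str = ""


def run_agent(observe: Callable[[], str],
              decide: Callable[[str, str], Action],
              act: Callable[[Action], None],
              goal: str,
              max_steps: int = 10) -> List[Action]:
    """Observe the screen, choose an action, execute it; stop on "done" or after max_steps."""
    history: List[Action] = []
    for _ in range(max_steps):
        screen = observe()             # sense
        action = decide(goal, screen)  # reason
        history.append(action)
        if action.kind == "done":
            break
        act(action)                    # act
    return history


class ToyDesktop:
    """A stand-in environment: one app slot and one text buffer."""

    def __init__(self) -> None:
        self.open_app = None
        self.text = ""

    def observe(self) -> str:
        return f"app={self.open_app} text={self.text!r}"

    def act(self, action: Action) -> None:
        if action.kind == "open_app":
            self.open_app = action.argument
        elif action.kind == "type":
            self.text += action.argument


def scripted_policy(goal: str, screen: str) -> Action:
    """Placeholder for a multi-modal model: open an app, type the goal text, then finish."""
    if "app=None" in screen:
        return Action("open_app", "Notepad")
    if goal not in screen:
        return Action("type", goal)
    return Action("done")


desktop = ToyDesktop()
history = run_agent(desktop.observe, scripted_policy, desktop.act, goal="hello")
print([a.kind for a in history])
```

In a real agent, `observe` would capture a screenshot and `decide` would call a model; the step limit guards against an agent that never declares the task finished.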

Windows Agent Arena benchmark

Many initiatives across industry and academia are actively researching strategies to create autonomous agents that can complete tasks on behalf of humans. For example, Microsoft recently released UFO, an agent capable of UI control in Windows. Prototyping agents is not easy, as it requires a repeatable, robust, and secure benchmark. Benchmarks already exist for web tasks (Visual Web Arena), mobile (Android World), and computers (OS World).

Windows Agent Arena extends the OS World platform, which is primarily focused on Linux systems, towards a wide range of tasks on Windows OS. In total we offer 154 tasks across browsers, documents, video, coding and apps (Notepad, Paint, File Explorer, Clock, and Settings).

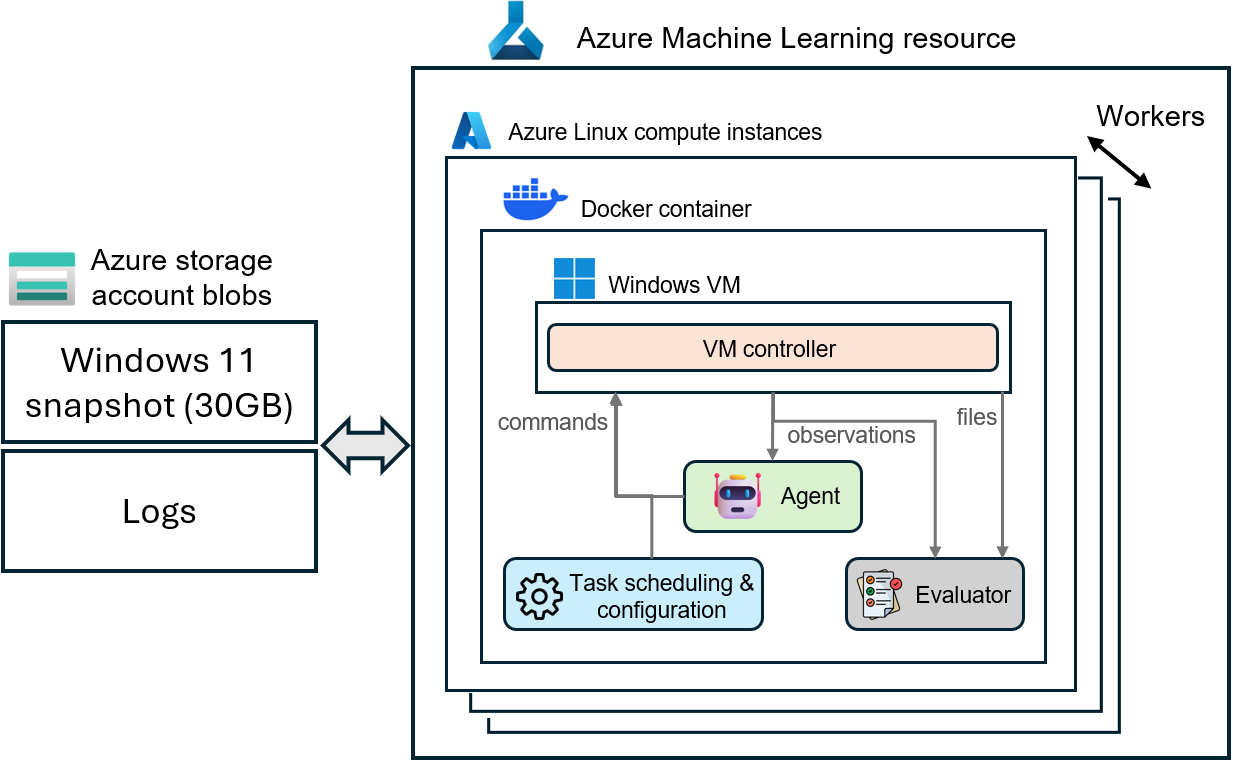

Another major feature of our benchmark is cloud parallelization. Instead of taking days to evaluate an agent by running tasks in series on a development machine, we allow easy integration with the Azure cloud. A researcher can deploy hundreds of agents in parallel, accelerating results to a matter of minutes, not days.
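To illustrate why running tasks in parallel shortens evaluation, here is a minimal local sketch. It does not use Azure or the benchmark's own tooling: local threads stand in for cloud workers, and `run_task`, `evaluate_serial`, and `evaluate_parallel` are hypothetical names with a placeholder task body.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def run_task(task_id: str) -> bool:
    """Hypothetical stand-in for one benchmark episode; returns whether the task succeeded."""
    time.sleep(0.01)                 # pretend the episode takes time
    return len(task_id) % 2 == 0     # arbitrary placeholder outcome


def evaluate_serial(task_ids: List[str]) -> Dict[str, bool]:
    # One task after another: total time grows with the number of tasks.
    return {task_id: run_task(task_id) for task_id in task_ids}


def evaluate_parallel(task_ids: List[str], workers: int = 8) -> Dict[str, bool]:
    # Independent tasks are spread across workers; pool.map preserves input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        outcomes = list(pool.map(run_task, task_ids))
    return dict(zip(task_ids, outcomes))


task_ids = [f"task-{i}" for i in range(16)]
serial_results = evaluate_serial(task_ids)
parallel_results = evaluate_parallel(task_ids)
print(serial_results == parallel_results)
```

The approach works because each task is independent of the others, so the results are the same either way and only the wall-clock time changes.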

Getting started with Windows Agent Arena is easy: you can clone our repository and test your agent locally first before scaling up your experiments.

What can agents do today?

Our technical report dives deep into the capabilities of generalist agents for Windows. We tested multiple models for screen understanding and reasoning on the Windows Agent Arena, and our best agent so far solves 19.5% of tasks (no points for partial completion), while a human scores 74.5% without external help. We find a large variance between domains, with approximately one third of browser, settings and video tasks successfully completed, while most Office tasks fail.
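As a rough sketch of how all-or-nothing scoring could be computed, the function below counts a task as solved only when it is fully completed, then reports an overall and a per-domain success rate. The function name, the `(domain, score)` input shape, and the sample data are assumptions for illustration; they are not the benchmark's evaluation code or its results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def success_rates(results: Iterable[Tuple[str, float]]) -> Tuple[float, Dict[str, float]]:
    """Return (overall rate, per-domain rates); a task counts only if its score is 1.0."""
    totals: Dict[str, int] = defaultdict(int)
    solved: Dict[str, int] = defaultdict(int)
    for domain, score in results:
        totals[domain] += 1
        if score >= 1.0:             # no points for partial completion
            solved[domain] += 1
    if not totals:
        return 0.0, {}
    per_domain = {domain: solved[domain] / totals[domain] for domain in totals}
    overall = sum(solved.values()) / sum(totals.values())
    return overall, per_domain


# Made-up scores for illustration only.
sample = [("browser", 1.0), ("browser", 0.5), ("browser", 0.0), ("office", 0.9)]
overall, per_domain = success_rates(sample)
print(overall, per_domain)
```

Reporting per-domain rates alongside the overall number makes the kind of variance between domains described above visible.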

We use Omniparser, a model developed by Microsoft, to parse the screenshot pixels into text, icon and image regions. We then send the pre-processed information to a cloud model, GPT-4V, and extract the exact commands to be called on the target computer.

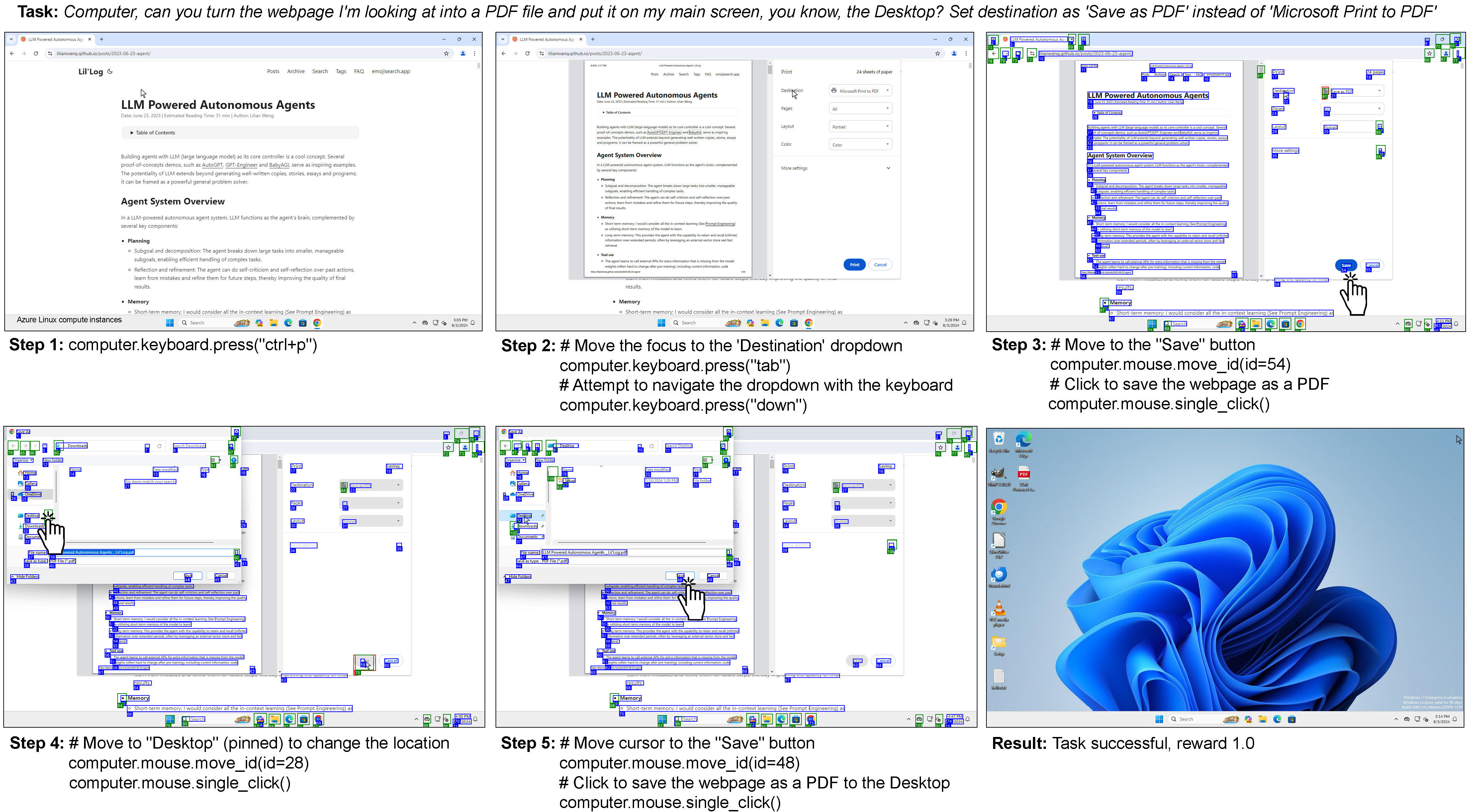

Check out some successful examples below:

Agents are not perfect, and we also find different examples of mistakes due to errors in low-level action execution or reasoning:

Responsible AI and ethical considerations

As we design and improve AI agents to perform complex tasks on computers, it is essential to operate within a framework of ethical guidelines and responsible AI use. From the outset, our team has been conscious of the potential risks and challenges posed by these technologies.

Privacy and safety are paramount concerns. As research groups develop and test these models, we must ensure that AI agents do not engage in any form of unauthorized access or leak personal information, thus minimizing potential security risks. We believe that users should be able to easily understand, direct, and override the actions of the AI when necessary.

As we continue our work in this exciting area, we remain committed to building AI technologies that respect user privacy, promote fairness, and contribute positively to society.

Applied Sciences
