Autostereo 'Magic Window'

3D for videoconferencing collaboration

In work-based teleconferencing, our interactions frequently center on a combination of data and people. A common scenario is a 1:1 videoconference to collaborate over a specific piece of work or collection of data. In a typical PC videoconferencing application, the data is presented in one window and the remote user in a separate window, often a small one located to one side of the main display.

This project creates an experience that is closer to real-world human interaction and so generates a greater sense of participation and collaboration than the typical scenario. It enables two users to face each other whilst collaborating on a common document that appears to be displayed on a transparent window interposed between them. The users are displayed approximately life-size, and the remote view can be panned around according to the local viewing position – much like viewing a scene through a real window.

Implementation

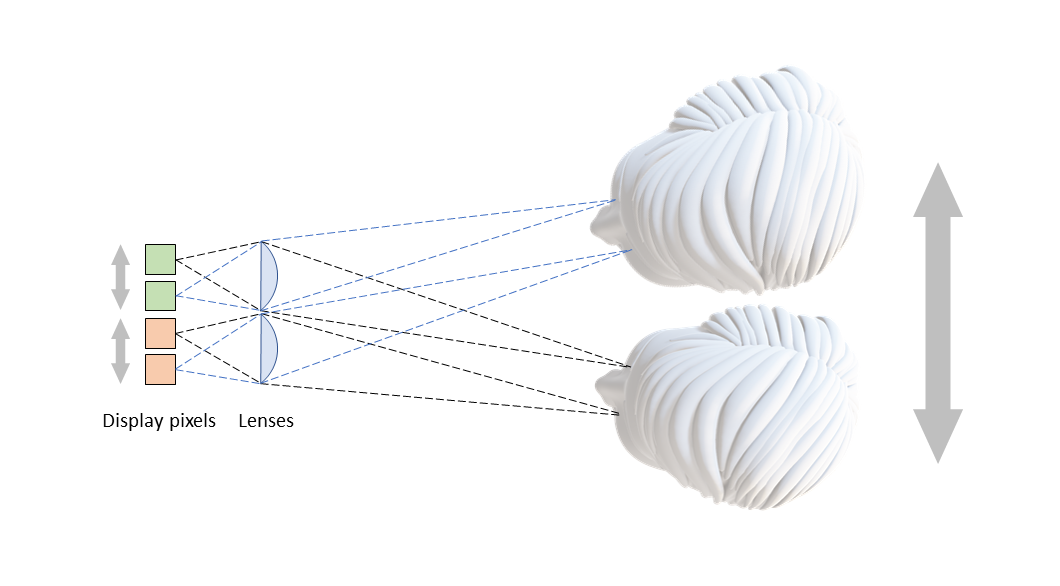

Our implementation uses an autostereo display, which creates images that are perceived as 3D without the use of special glasses. The display combines eye tracking with a lenticular structure to direct the left and right images of a stereo pair to the corresponding eyes of the viewer.

In our application we use this capability to create two separate image planes: a workspace plane that contains the data or document under revision, and a plane containing the remote participant in the videoconference. The workspace is rendered in the plane of the display, and a touch sensor overlay lets each user interact with it for the usual editing and manipulation tasks; both participants see a common view of the workspace. The remote participant is rendered in a fixed 2D plane approximately 1 meter behind the display, a comfortable interpersonal distance. The user therefore sees a spatial separation between the data and the remote participant.

Why not render the remote user in full stereo 3D, since the display is 3D capable? We found, through user experiments, that a full 3D remote image combined with the workspace placed too high a cognitive load on the viewer. The 2D plane is less visually taxing.
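As a rough illustration of what placing a plane behind the display means for the stereo pair, the sketch below computes the on-screen horizontal offset between the left-eye and right-eye images using similar triangles. The eye separation and viewing distance are assumed values chosen for the example, and the function name is hypothetical; none of these come from the project itself.

```python
def screen_disparity(eye_separation_m, viewing_distance_m, depth_behind_m):
    """On-screen offset (meters) between left- and right-eye images of a
    point that should appear depth_behind_m behind the display plane.

    Derived from similar triangles: each eye's line of sight to the point
    crosses the screen at a different place, and the gap between those two
    crossings is e * z / (D + z).
    """
    e, d, z = eye_separation_m, viewing_distance_m, depth_behind_m
    return e * z / (d + z)


# Assumed values: 65 mm eye separation, viewer 0.6 m from the screen.
workspace = screen_disparity(0.065, 0.6, 0.0)  # workspace in the display plane
remote = screen_disparity(0.065, 0.6, 1.0)     # remote plane 1 m behind it
print(workspace, remote)
```

A plane at the display surface needs no offset, while a plane further back needs a larger one; the offset approaches the eye separation as the depth grows.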

The camera used to relay the participant’s image has a low-distortion lens with a wider-than-normal angle of view. The camera image is cropped to a smaller size, and the center of the crop is moved up-down and left-right according to the viewer’s head position. This lets the viewer look around the remote scene whilst maintaining an appropriately life-sized view of the remote participant.
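The head-steered crop described above can be sketched as follows. This is a minimal illustration, not the project's code: the function name, the normalized head coordinates in the range -1 to 1, and the choice to move the crop opposite to the head (as a real window would behave) are all assumptions.

```python
def crop_window(frame_w, frame_h, crop_w, crop_h, head_x, head_y):
    """Return (left, top, right, bottom) of a fixed-size crop rectangle.

    head_x, head_y are assumed to be normalized head offsets in [-1, 1],
    with 0 meaning the viewer is centered in front of the display.
    """
    # Clamp the head offsets so the crop can never leave the camera frame.
    nx = max(-1.0, min(1.0, head_x))
    ny = max(-1.0, min(1.0, head_y))

    # Furthest the crop center may travel from the frame center.
    max_dx = (frame_w - crop_w) / 2
    max_dy = (frame_h - crop_h) / 2

    # Assumption: moving the head one way reveals more of the scene on the
    # opposite side, so the crop center moves against the head offset.
    cx = frame_w / 2 - nx * max_dx
    cy = frame_h / 2 - ny * max_dy

    left = int(round(cx - crop_w / 2))
    top = int(round(cy - crop_h / 2))
    return left, top, left + crop_w, top + crop_h


# Centered viewer: the crop sits in the middle of a 1920x1080 frame.
print(crop_window(1920, 1080, 960, 540, 0.0, 0.0))
```

Because the crop size stays fixed while only its center moves, the remote participant keeps the same apparent size on screen as the view pans.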

Applied Sciences
